test D43470

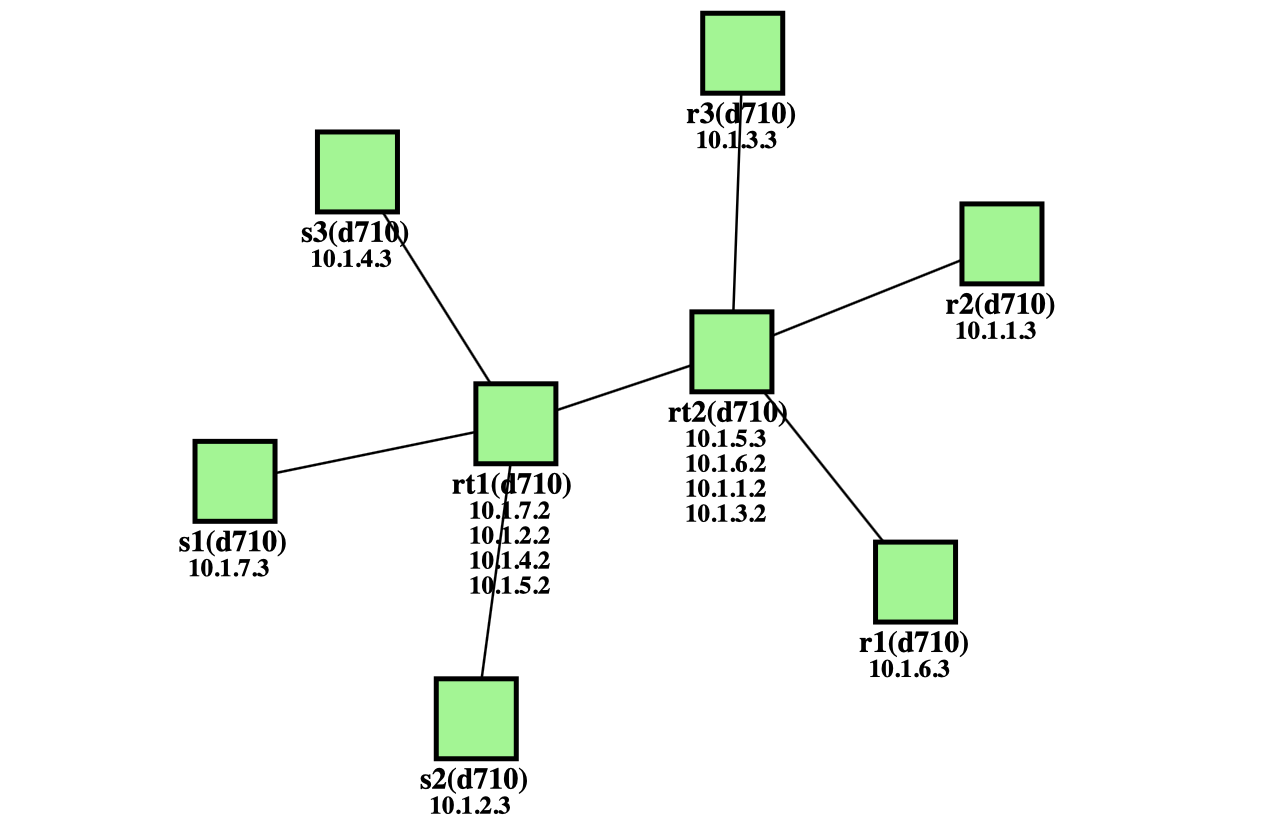

The testbed uses a dumbbell topology built from Emulab.net d710 nodes with 1 Gbps links (see: Emulab hardware).

testbed design

The topology is a dumbbell with three nodes (s1,s2,s3) as TCP traffic senders using iperf3, two nodes (rt1,rt2) as routers with a single bottleneck link, and three nodes (r1,r2,r3) as TCP traffic receivers.

Nodes s1, s2 and r1, r2, r3 run FreeBSD 15-CURRENT; s3 runs Ubuntu Linux 22.04 so that the results can be compared with TCP in the Linux kernel.

The routers rt1 and rt2 run Ubuntu Linux 18.04 with shallow L2 and L3 buffers that together hold around 256 packets.

A dummynet box adds a 40 ms round-trip time (RTT) on the bottleneck link. Each sender sends data traffic to its corresponding receiver, i.e. s1 => r1, s2 => r2 and s3 => r3.

The bottleneck link has bandwidth 1Gbps. There is background UDP traffic from rt1 to rt2 (rt1 => rt2) so that a sender's TCP traffic will encounter congestion at the rt1 node output port.
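For reference, the bandwidth-delay product (BDP) of this path follows from the figures above. The sketch below is a back-of-the-envelope calculation, not part of the test scripts. The function names are made up for illustration, the 1448-byte segment size is taken from the data_size values in the siftr2 logs, and the 500 Mbit/s case assumes the TCP flow can use whatever the 500 Mbits/sec background UDP traffic leaves free.

```shell
#!/bin/sh
# bdp_bytes RATE_MBPS RTT_MS -> bytes needed in flight to fill the pipe.
# Mbit/s * ms = kbit; kbit * 1000 / 8 = bytes, hence the factor 125.
bdp_bytes() {
    echo $(( $1 * $2 * 125 ))
}

# bytes_to_segments BYTES MSS -> whole segments, rounded down.
bytes_to_segments() {
    echo $(( $1 / $2 ))
}

full=$(bdp_bytes 1000 40)    # the whole 1 Gbps bottleneck
share=$(bdp_bytes 500 40)    # assumed share left beside the UDP traffic

echo "BDP at 1000 Mbit/s: $full bytes, $(bytes_to_segments "$full" 1448) segments"
echo "BDP at 500 Mbit/s: $share bytes, $(bytes_to_segments "$share" 1448) segments"
```

Both figures are several times larger than the roughly 256-packet router buffers, which is what makes the buffers shallow for this path.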

test IPv4 traffic

The FreeBSD version tested before and after the patch is FreeBSD 15.0-CURRENT #0 main-f11c79fc00.

The Linux kernel tested on Ubuntu Linux 22.04 is 5.15.0-112-generic (the 2nd Linux test ran on 5.15.0-124-generic).

| version | observation1 | observation2 | observation3 |
|---|---|---|---|
| base | cwnd starts from 1 mss in loss recovery | seen TSO size (>mss) data in loss recovery | fractional data size (<mss) in loss recovery |
| patch D43470 | cwnd starts large size in loss recovery | seen TSO size (>mss) data in loss recovery | fractional data size (<mss) in loss recovery |
| Linux | cwnd starts large size in loss recovery | seen TSO size (>mss) data in loss recovery | no fractional data size (<mss) in loss recovery |

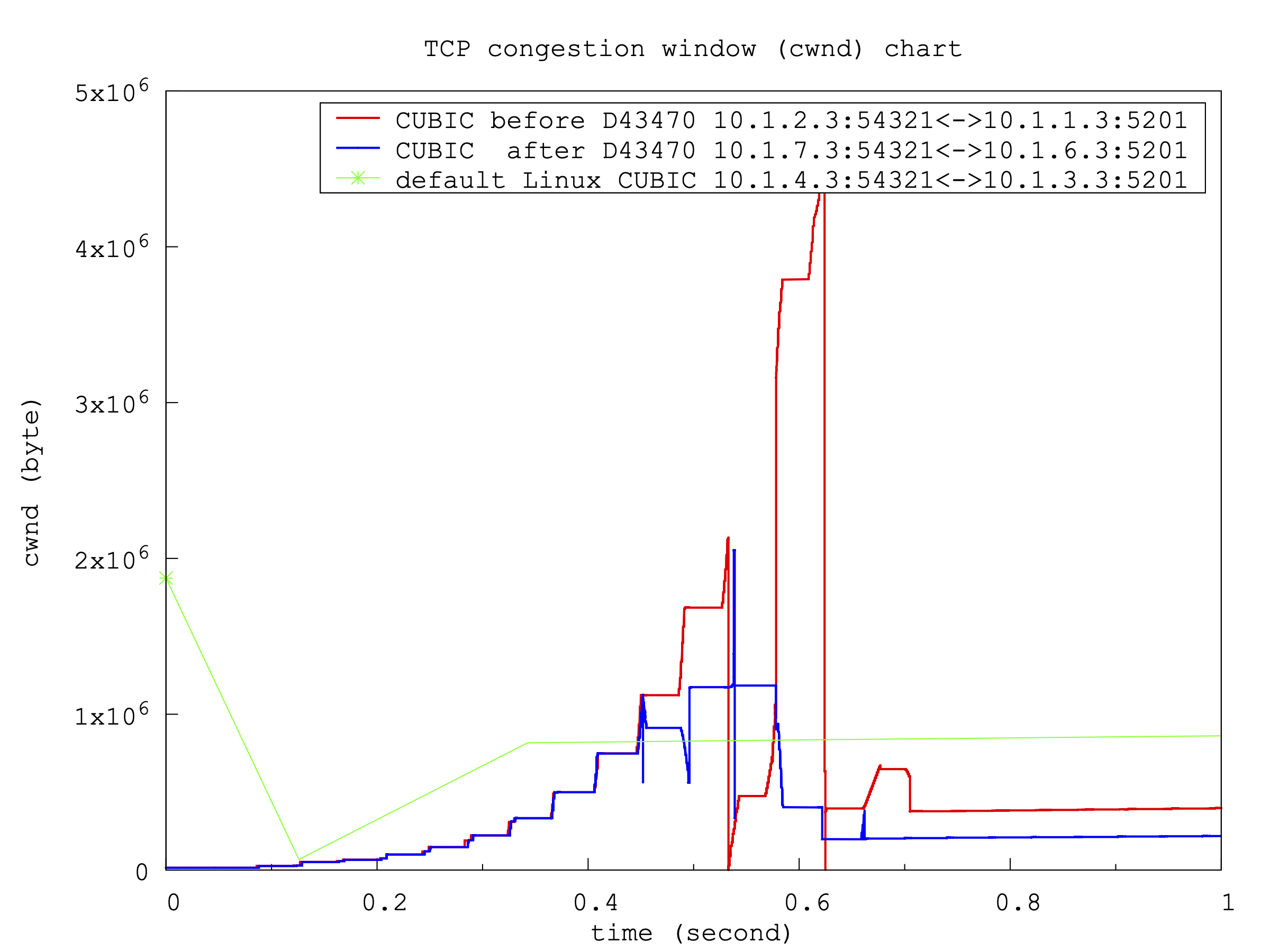

TCP cwnd before/after patch D43470 and also with Linux(in 1st second):

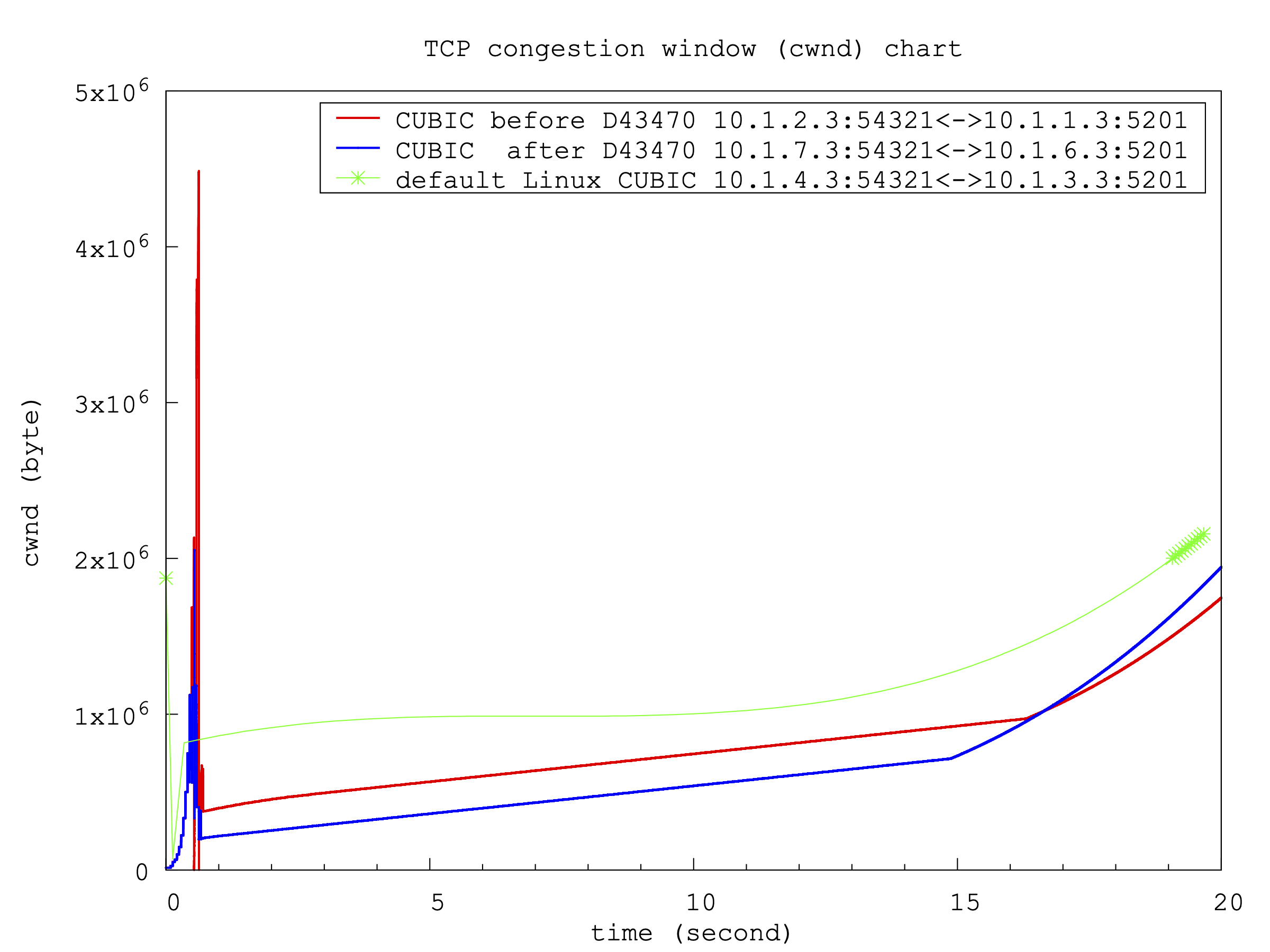

TCP cwnd before/after patch D43470 and also with Linux(in 20 seconds):

before patch D43470

The background UDP traffic is 500 Mbits/sec during the test.

background traffic:

cc@rt1:~ % iperf3 -B 10.1.5.2 --cport 54321 -c 10.1.5.3 -t 6000 -i 2 --udp -b 500m

Connecting to host 10.1.5.3, port 5201

[ 4] local 10.1.5.2 port 54321 connected to 10.1.5.3 port 5201

[ ID] Interval Transfer Bandwidth Total Datagrams

[ 4] 0.00-2.00 sec 119 MBytes 500 Mbits/sec 15257

[ 4] 2.00-4.00 sec 119 MBytes 500 Mbits/sec 15259

[ 4] 4.00-6.00 sec 119 MBytes 500 Mbits/sec 15255

...

snd side:

net.inet.tcp.sack.tso: 1 -> 1

net.inet.siftr2.port_filter: 54321 -> 54321

net.inet.siftr2.logfile: /proj/fbsd-transport/chengcui/tests/testD43470/before_fix/CUBIC/s2.before_fix.siftr2 -> /proj/fbsd-transport/chengcui/tests/testD43470/before_fix/CUBIC/s2.before_fix.siftr2

net.inet.siftr2.enabled: 0 -> 1

tcpdump: listening on bce2, link-type EN10MB (Ethernet), snapshot length 100 bytes

iperf 3.12

FreeBSD s2.dumbbelld710.fbsd-transport.emulab.net 15.0-CURRENT FreeBSD 15.0-CURRENT #0 main-f11c79fc00: Thu Oct 17 14:06:28 MDT 2024 cc@n1.imaged43470.fbsd-transport.emulab.net:/usr/obj/usr/src/amd64.amd64/sys/TESTBED-GENERIC amd64

Control connection MSS 1460

Time: Mon, 21 Oct 2024 18:16:22 UTC

Connecting to host r2, port 5201

Cookie: dcx2k5gfqeji7jewh2dhnbwcld42mj3cvdur

TCP MSS: 1460 (default)

[ 5] local 10.1.2.3 port 54321 connected to 10.1.1.3 port 5201

Starting Test: protocol: TCP, 1 streams, 1048576 byte blocks, omitting 0 seconds, 20 second test, tos 0

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-2.00 sec 24.0 MBytes 101 Mbits/sec 129 445 KBytes

[ 5] 2.00-4.00 sec 23.3 MBytes 97.7 Mbits/sec 0 519 KBytes

[ 5] 4.00-6.00 sec 26.7 MBytes 112 Mbits/sec 0 591 KBytes

[ 5] 6.00-8.00 sec 29.8 MBytes 125 Mbits/sec 0 659 KBytes

[ 5] 8.00-10.00 sec 33.3 MBytes 140 Mbits/sec 0 729 KBytes

[ 5] 10.00-12.00 sec 36.7 MBytes 154 Mbits/sec 0 800 KBytes

[ 5] 12.00-14.00 sec 40.0 MBytes 168 Mbits/sec 0 870 KBytes

[ 5] 14.00-16.00 sec 43.4 MBytes 182 Mbits/sec 0 940 KBytes

[ 5] 16.00-18.00 sec 51.0 MBytes 214 Mbits/sec 0 1.21 MBytes

[ 5] 18.00-20.00 sec 70.3 MBytes 295 Mbits/sec 0 1.68 MBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

Test Complete. Summary Results:

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-20.00 sec 378 MBytes 159 Mbits/sec 129 sender

[ 5] 0.00-20.04 sec 377 MBytes 158 Mbits/sec receiver

CPU Utilization: local/sender 4.9% (0.7%u/4.2%s), remote/receiver 5.1% (0.9%u/4.2%s)

snd_tcp_congestion cubic

rcv_tcp_congestion cubic

iperf Done.

net.inet.siftr2.enabled: 1 -> 0

271106 packets captured

274480 packets received by filter

0 packets dropped by kernel

-rw-r--r-- 1 root fbsd-transport 39M Oct 21 12:16 s2.before_fix.siftr2

siftr2 analysis (recovery_flags is from tp->t_flags)

##DIRECTION relative_timestamp CWND SSTHRESH data_size recovery_flags

o 0.533012 1448 1065742 2612399709 1453072297 1448 TF_FASTRECOVERY | TF_CONGRECOVERY | << cwnd starts from one mss

o 0.533020 2896 1065742 2612401157 1453072297 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.533025 4344 1065742 2612402605 1453072297 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.533031 5792 1065742 2612404053 1453072297 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.533036 7240 1065742 2612405501 1453072297 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.533046 8688 1065742 2612406949 1453072297 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.533051 10136 1065742 2612408397 1453072297 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

...

o 0.534082 60816 1065742 2612558989 1453072297 24616 TF_FASTRECOVERY | TF_CONGRECOVERY | << TSO size data (>mss)

o 0.534087 85432 1065742 2612583605 1453072297 4344 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.534875 91224 1065742 2614558677 1453072297 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

...

o 0.535079 118736 1065742 2612706685 1453072297 37648 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.536102 156384 1065742 2614581845 1453072297 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.536108 157832 1065742 2614583293 1453072297 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.536112 159280 1065742 2614584741 1453072297 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.536117 160728 1065742 2614586189 1453072297 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.536124 162176 1065742 2612831213 1453072297 17376 TF_FASTRECOVERY | TF_CONGRECOVERY |

...

o 0.574078 718208 1065742 2614115589 1453072297 17376 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.575006 700832 1065742 2614167717 1453072297 34752 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.575032 752960 1065742 2614219845 1453072297 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.576008 825360 1065742 2614292245 1453072297 14480 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.577012 932512 1065742 2614399397 1453072297 10136 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.577048 962920 1065742 2614429805 1453072297 31856 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.577073 1015048 1065742 2614481933 1453072297 14480 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.577823 1064280 1065742 2614923573 1453072297 808 TF_FASTRECOVERY | TF_CONGRECOVERY | <= fraction

o 0.577829 1064280 1065742 2614924381 1453072297 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.578006 1063640 1065742 2614925829 1453072297 512 TF_FASTRECOVERY | TF_CONGRECOVERY | <= fraction

o 0.578013 1064152 1065742 2614926341 1453072297 936 TF_FASTRECOVERY | TF_CONGRECOVERY | <= fraction

o 0.578019 1063640 1065742 2614927277 1453072297 424 TF_FASTRECOVERY | TF_CONGRECOVERY | <= fraction

o 0.578025 1064064 1065742 2614927701 1453072297 424 TF_FASTRECOVERY | TF_CONGRECOVERY | <= fraction
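The "TSO size" and "fraction" annotations in the log above can be reproduced mechanically. The following is a minimal sketch, assuming an MSS of 1448 bytes (the size of the ordinary segments in the log) and treating any data_size that is not a whole multiple of the MSS as fractional; `classify_size` is a hypothetical helper, not part of siftr2.

```shell
#!/bin/sh
# classify_size SIZE MSS -> "fraction", "tso" or "mss"
#   fraction: not a whole multiple of the MSS
#   tso:      a whole multiple larger than one MSS
#   mss:      exactly one MSS
classify_size() {
    if [ $(( $1 % $2 )) -ne 0 ]; then
        echo fraction
    elif [ "$1" -gt "$2" ]; then
        echo tso
    else
        echo mss
    fi
}

# A few data_size values copied from the excerpt above.
for size in 1448 24616 37648 808 512; do
    echo "$size -> $(classify_size "$size" 1448)"
done
```

To run it over a whole log, the data_size column would have to be extracted first; in the excerpt it appears to be the seventh whitespace-separated field, but that position is an assumption based on these lines only.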

after patch D43470

The background UDP traffic is 500 Mbits/sec during the test.

background traffic:

cc@rt1:~ % iperf3 -B 10.1.5.2 --cport 54321 -c 10.1.5.3 -t 6000 -i 2 --udp -b 500m

Connecting to host 10.1.5.3, port 5201

[ 4] local 10.1.5.2 port 54321 connected to 10.1.5.3 port 5201

[ ID] Interval Transfer Bandwidth Total Datagrams

[ 4] 0.00-2.00 sec 119 MBytes 500 Mbits/sec 15254

...

snd side:

net.inet.tcp.sack.tso: 0 -> 1

net.inet.siftr2.port_filter: 0 -> 54321

net.inet.siftr2.logfile: /var/log/siftr2.log -> /proj/fbsd-transport/chengcui/tests/testD43470/after_fix/CUBIC/s1.after_fix.siftr2

net.inet.siftr2.enabled: 0 -> 1

tcpdump: listening on bce3, link-type EN10MB (Ethernet), snapshot length 100 bytes

iperf 3.12

FreeBSD s1.dumbbelld710.fbsd-transport.emulab.net 15.0-CURRENT FreeBSD 15.0-CURRENT #0 main-f11c79fc00-dirty: Thu Oct 17 13:29:49 MDT 2024 cc@n1.imaged43470.fbsd-transport.emulab.net:/usr/obj/usr/src/amd64.amd64/sys/TESTBED-GENERIC amd64

Control connection MSS 1460

Time: Mon, 21 Oct 2024 17:50:42 UTC

Connecting to host r1, port 5201

Cookie: tiawr7ob2azglstu4czwfovcaxbyhsewez7n

TCP MSS: 1460 (default)

[ 5] local 10.1.7.3 port 54321 connected to 10.1.6.3 port 5201

Starting Test: protocol: TCP, 1 streams, 1048576 byte blocks, omitting 0 seconds, 20 second test, tos 0

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-2.00 sec 12.7 MBytes 53.2 Mbits/sec 184 250 KBytes

[ 5] 2.00-4.00 sec 13.5 MBytes 56.8 Mbits/sec 0 320 KBytes

[ 5] 4.00-6.00 sec 16.9 MBytes 70.8 Mbits/sec 0 388 KBytes

[ 5] 6.00-8.00 sec 20.4 MBytes 85.5 Mbits/sec 0 460 KBytes

[ 5] 8.00-10.00 sec 23.8 MBytes 99.7 Mbits/sec 0 530 KBytes

[ 5] 10.00-12.00 sec 26.9 MBytes 113 Mbits/sec 0 599 KBytes

[ 5] 12.00-14.00 sec 30.3 MBytes 127 Mbits/sec 0 669 KBytes

[ 5] 14.00-16.00 sec 36.1 MBytes 151 Mbits/sec 0 885 KBytes

[ 5] 16.00-18.00 sec 52.8 MBytes 221 Mbits/sec 0 1.29 MBytes

[ 5] 18.00-20.00 sec 77.1 MBytes 324 Mbits/sec 0 1.87 MBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

Test Complete. Summary Results:

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-20.00 sec 310 MBytes 130 Mbits/sec 184 sender

[ 5] 0.00-20.04 sec 309 MBytes 129 Mbits/sec receiver

CPU Utilization: local/sender 4.0% (0.5%u/3.5%s), remote/receiver 4.1% (0.6%u/3.5%s)

snd_tcp_congestion cubic

rcv_tcp_congestion cubic

iperf Done.

net.inet.siftr2.enabled: 1 -> 0

223672 packets captured

225222 packets received by filter

0 packets dropped by kernel

-rw-r--r-- 1 root fbsd-transport 32M Oct 21 11:51 s1.after_fix.siftr2

siftr2 analysis (recovery_flags is from tp->t_flags)

##DIRECTION relative_timestamp CWND SSTHRESH data_size recovery_flags

o 0.452035 1104824 562026 729342482 1668311966 1448 TF_FASTRECOVERY | TF_CONGRECOVERY | << cwnd starts from this large size (763 mss segments)

o 0.452042 1101928 562026 729343930 1668311966 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.452047 1100480 562026 729345378 1668311966 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.452055 1099032 562026 729346826 1668311966 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.452061 1097584 562026 729348274 1668311966 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.452068 1096136 562026 729349722 1668311966 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

...

o 0.493048 732688 562026 730741250 1668311966 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.493143 699384 562026 730128746 1668311966 7240 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.494896 640016 562026 730238794 1668311966 8688 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.494904 640016 562026 730248930 1668311966 2896 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.495024 560376 562026 730263410 1668311966 10136 TF_FASTRECOVERY | TF_CONGRECOVERY | << TSO size data (>mss)

o 0.495030 560376 562026 730273546 1668311966 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.495036 560376 562026 730274994 1668311966 18824 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.495982 560376 562026 730377802 1668311966 17376 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.496008 560376 562026 730412554 1668311966 17376 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.496056 1168536 333110 729342482 1668311966 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |

...

o 0.537937 1386568 333110 730742698 1668311966 1428 TF_FASTRECOVERY | TF_CONGRECOVERY | <= fraction

o 0.537989 1192516 333110 730744126 1668311966 65160 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.537995 1192516 333110 730809286 1668311966 65160 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.537999 1192516 333110 730874446 1668311966 65160 TF_FASTRECOVERY | TF_CONGRECOVERY |

o 0.538004 1192516 333110 730939606 1668311966 9640 TF_FASTRECOVERY | TF_CONGRECOVERY | <= fraction

...
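The "763 mss segments" annotation is simply the first cwnd seen in recovery divided by the 1448-byte segment size. The sketch below shows one way to pull that number out of records in the layout shown above; `first_recovery_cwnd` is a hypothetical helper, and it assumes that cwnd is the third whitespace-separated field and that outgoing records start with `o`, as in this excerpt.

```shell
#!/bin/sh
# first_recovery_cwnd MSS < records
# Prints the cwnd, in whole segments, of the first outgoing record
# that carries the TF_FASTRECOVERY flag, then stops reading.
first_recovery_cwnd() {
    awk -v mss="$1" '
        $1 == "o" && /TF_FASTRECOVERY/ {
            printf "%d\n", $3 / mss
            exit
        }'
}

# First recovery record before the patch (copied from the earlier excerpt).
printf '%s\n' \
    'o 0.533012 1448 1065742 2612399709 1453072297 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |' |
    first_recovery_cwnd 1448

# First recovery record after the patch (copied from the excerpt above).
printf '%s\n' \
    'o 0.452035 1104824 562026 729342482 1668311966 1448 TF_FASTRECOVERY | TF_CONGRECOVERY |' |
    first_recovery_cwnd 1448
```

With these two records the helper prints 1 and 763, matching observation1 for the base and patched kernels.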

compare TCP in Linux

The background UDP traffic is 500 Mbits/sec during the test.

background traffic:

cc@rt1:~ % iperf3 -B 10.1.5.2 --cport 54321 -c 10.1.5.3 -t 6000 -i 2 --udp -b 500m

Connecting to host 10.1.5.3, port 5201

[ 4] local 10.1.5.2 port 54321 connected to 10.1.5.3 port 5201

[ ID] Interval Transfer Bandwidth Total Datagrams

[ 4] 0.00-2.00 sec 119 MBytes 500 Mbits/sec 15254

[ 4] 2.00-4.00 sec 119 MBytes 500 Mbits/sec 15262

...

root@s3:~# iperf3 -B s3 --cport 54321 -c r3 -t 20 -Vi 2

iperf 3.9

Linux s3.dumbbelld710.fbsd-transport.emulab.net 5.15.0-112-generic #122-Ubuntu SMP Thu May 23 07:48:21 UTC 2024 x86_64

Control connection MSS 1448

Time: Mon, 21 Oct 2024 18:51:01 GMT

Connecting to host r3, port 5201

Cookie: n5ppgfu3qifvsp4was6o2yirknmip2vsuzzc

TCP MSS: 1448 (default)

[ 5] local 10.1.4.3 port 54321 connected to 10.1.3.3 port 5201

Starting Test: protocol: TCP, 1 streams, 131072 byte blocks, omitting 0 seconds, 20 second test, tos 0

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-2.00 sec 53.1 MBytes 223 Mbits/sec 384 1.10 MBytes

[ 5] 2.00-4.00 sec 55.0 MBytes 231 Mbits/sec 0 1.19 MBytes

[ 5] 4.00-6.00 sec 58.8 MBytes 246 Mbits/sec 0 1.22 MBytes

[ 5] 6.00-8.00 sec 58.8 MBytes 246 Mbits/sec 0 1.22 MBytes

[ 5] 8.00-10.00 sec 58.8 MBytes 246 Mbits/sec 0 1.23 MBytes

[ 5] 10.00-12.00 sec 60.0 MBytes 252 Mbits/sec 0 1.26 MBytes

[ 5] 12.00-14.00 sec 62.5 MBytes 262 Mbits/sec 0 1.34 MBytes

[ 5] 14.00-16.00 sec 67.5 MBytes 283 Mbits/sec 0 1.50 MBytes

[ 5] 16.00-18.00 sec 75.0 MBytes 315 Mbits/sec 0 1.77 MBytes

[ 5] 18.00-20.00 sec 91.2 MBytes 383 Mbits/sec 0 2.17 MBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

Test Complete. Summary Results:

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-20.00 sec 641 MBytes 269 Mbits/sec 384 sender

[ 5] 0.00-20.04 sec 631 MBytes 264 Mbits/sec receiver

CPU Utilization: local/sender 1.3% (0.1%u/1.2%s), remote/receiver 6.2% (1.3%u/4.8%s)

snd_tcp_congestion cubic

rcv_tcp_congestion newreno

iperf Done.

root@s3:~#

root@s3:~# tcpdump -w linux_snd.pcap -s 100 -i eno4 tcp port 54321

tcpdump: listening on eno4, link-type EN10MB (Ethernet), snapshot length 100 bytes

^C256510 packets captured

256510 packets received by filter

0 packets dropped by kernel
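To compare the runs side by side, the sender summary line of each saved iperf3 output can be reduced to its bitrate and retransmit count. This is a small sketch that relies only on the column order visible in the summaries above; `sender_summary` is a hypothetical helper name.

```shell
#!/bin/sh
# sender_summary < iperf3_output
# Prints "<bitrate> <unit> <retransmits>" for every summary line that
# ends in "sender". Fields are counted from the end of the line so the
# width of the "[ ID]" column does not matter.
sender_summary() {
    awk '$NF == "sender" { print $(NF-3), $(NF-2), $(NF-1) }'
}

# Sender summary lines copied from the three runs above.
sender_summary <<'EOF'
[ 5] 0.00-20.00 sec 378 MBytes 159 Mbits/sec 129 sender
[ 5] 0.00-20.00 sec 310 MBytes 130 Mbits/sec 184 sender
[ 5] 0.00-20.00 sec 641 MBytes 269 Mbits/sec 384 sender
EOF
```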

2nd test in Linux

It turns out that cwnd is easy to extract from the Linux kernel with the tcp_probe tracing event. This is part of my script:

echo "sport == ${tcp_port}" > /sys/kernel/debug/tracing/events/tcp/tcp_probe/filter

echo > /sys/kernel/debug/tracing/trace

echo 1 > /sys/kernel/debug/tracing/events/tcp/tcp_probe/enable

tcpdump -w ${tcpdump_name} -s 100 -i enp0s5 tcp port ${tcp_port} &

pid=$!

sleep 1

iperf3 -B ${src} --cport ${tcp_port} -c ${dst} -l 1M -t 2 -i 1 -VC cubic

echo 0 > /sys/kernel/debug/tracing/events/tcp/tcp_probe/enable

cat /sys/kernel/debug/tracing/trace > ${dir}/${trace_name}

kill $pid

background traffic: The background UDP traffic is 500 Mbits/sec during the test.

cc@rt1:~ % iperf3 -B 10.1.5.2 --cport 54321 -c 10.1.5.3 -t 6000 -i 2 --udp -b 500m

Connecting to host 10.1.5.3, port 5201

[ 4] local 10.1.5.2 port 54321 connected to 10.1.5.3 port 5201

[ ID] Interval Transfer Bandwidth Total Datagrams

[ 4] 0.00-2.00 sec 119 MBytes 500 Mbits/sec 15258

[ 4] 2.00-4.00 sec 119 MBytes 500 Mbits/sec 15258

...

root@s3:~# bash snd.bash linux s3 r3

tcpdump: listening on eno2, link-type EN10MB (Ethernet), snapshot length 100 bytes

iperf 3.9

Linux s3.dumbbelld710.fbsd-transport.emulab.net 5.15.0-124-generic #134-Ubuntu SMP Fri Sep 27 20:20:17 UTC 2024 x86_64

Control connection MSS 1448

Time: Tue, 29 Oct 2024 15:05:58 GMT

Connecting to host r3, port 5201

Cookie: levk5bi2x37cn4i3vyf7qyasda43c2vfgmin

TCP MSS: 1448 (default)

[ 5] local 10.1.4.3 port 54321 connected to 10.1.3.3 port 5201

Starting Test: protocol: TCP, 1 streams, 1048576 byte blocks, omitting 0 seconds, 20 second test, tos 0

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-2.00 sec 44.4 MBytes 186 Mbits/sec 300 875 KBytes

[ 5] 2.00-4.00 sec 44.8 MBytes 188 Mbits/sec 0 943 KBytes

[ 5] 4.00-6.00 sec 44.7 MBytes 187 Mbits/sec 0 964 KBytes

[ 5] 6.00-8.00 sec 44.7 MBytes 187 Mbits/sec 0 964 KBytes

[ 5] 8.00-10.00 sec 44.6 MBytes 187 Mbits/sec 0 974 KBytes

[ 5] 10.00-12.00 sec 49.1 MBytes 206 Mbits/sec 0 1020 KBytes

[ 5] 12.00-14.00 sec 49.1 MBytes 206 Mbits/sec 0 1.10 MBytes

[ 5] 14.00-16.00 sec 58.3 MBytes 244 Mbits/sec 0 1.29 MBytes

[ 5] 16.00-18.00 sec 67.2 MBytes 282 Mbits/sec 0 1.60 MBytes

[ 5] 18.00-20.00 sec 89.8 MBytes 376 Mbits/sec 0 2.05 MBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

Test Complete. Summary Results:

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-20.00 sec 537 MBytes 225 Mbits/sec 300 sender

[ 5] 0.00-20.04 sec 525 MBytes 220 Mbits/sec receiver

CPU Utilization: local/sender 1.1% (0.1%u/1.0%s), remote/receiver 9.0% (1.3%u/7.6%s)

snd_tcp_congestion cubic

rcv_tcp_congestion cubic

iperf Done.

215291 packets captured

216847 packets received by filter

0 packets dropped by kernel

-rw-r--r-- 1 root fbsd-transport 2.8M Oct 29 09:06 s3.linux.trace
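The saved trace can then be reduced to a cwnd series. The sketch below assumes the tcp_probe event prints `snd_cwnd=` and `ssthresh=` as decimal key=value pairs, which is the layout in 5.15 kernels as far as I know; the authoritative layout is in /sys/kernel/debug/tracing/events/tcp/tcp_probe/format. Note that Linux reports snd_cwnd in packets, not bytes, unlike the siftr2 CWND column. `extract_cwnd` is a hypothetical helper, and the sample line is made up for illustration rather than taken from s3.linux.trace.

```shell
#!/bin/sh
# extract_cwnd < trace_file
# Prints "<snd_cwnd> <ssthresh>" for every tcp_probe line that has a
# snd_cwnd field. Fields are located by name, not by position, because
# the leading columns of a trace line vary with the tracer options.
extract_cwnd() {
    awk '/tcp_probe:/ {
        cwnd = ""; ssth = ""
        # "snd_cwnd=" and "ssthresh=" are both 9 characters long.
        if (match($0, /snd_cwnd=[0-9]+/))
            cwnd = substr($0, RSTART + 9, RLENGTH - 9)
        if (match($0, /ssthresh=[0-9]+/))
            ssth = substr($0, RSTART + 9, RLENGTH - 9)
        if (cwnd != "")
            print cwnd, ssth
    }'
}

# Made-up sample line in the assumed layout.
printf '%s\n' \
    'iperf3-1234 [001] ..s. 100.000001: tcp_probe: family=AF_INET src=10.1.4.3:54321 dest=10.1.3.3:5201 mark=0x0 data_len=0 snd_nxt=0x1 snd_una=0x1 snd_cwnd=10 ssthresh=2147483647 snd_wnd=65535 srtt=40000 rcv_wnd=65535 sock_cookie=1' |
    extract_cwnd
```

Multiplying the first column by the 1448-byte MSS gives a byte value that can be plotted against the FreeBSD cwnd.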