This article outlines some generic routing-related problems in our network stack and describes the solutions proposed in projects/routing

Contents

Current problems

Performance issues

Many people considered routing lookups slow (and created various mechanisms of avoiding such lookups, like route caching in tunnels (or even protocols), or having per-flow hash record (FLOWTABLE)).

Typical packet processing (forwarding for router, or output for web server) path consists of:

- doing routing lookup (radix read rwlock + routing entry (rte) mutex lock)

- (optionally) interface address (ifa) atomic refcount acquire/release

- doing link level entry (lle, llentry) lookup (afdata read rwlock + llentry read (or write) lock)

Actually, given number of locks is roughly like having single Giant lock: single-cpu performance (600-1mpps) is very similar to the results you can get using modern dual-cpu system with 16/32 cores. The most expensive operation is acquiring struct rtentry mutex (think about default route delivering HUGE contention on single lock, which is also hold for plenty of time). However, other locks do heavily impact processing path.

Routing code itself

Dealing with performance issues revealed number of problems which also have to be dealt with:

- Routing code _requires_ every consumer knows about its internal implementation (radix+sockaddr) making it impossible to hack

too generic and inefficient structures (sizeof(struct rtentry) > 128 bytes for IPv6 on amd64), IPv4 full-view > 100M)

- Complex low-level api making life hard for consumers (sockaddrs, "address ifp", check route is up, check ifp, check mtu..)

LLE code

While a really good job of decoupling L3/L2 was done in 8/, it was not completed. LLE code suffers from the same problems:

- Implementation code is mixed with consumer code (in_arpinput(), for example) and randomly split in several different files (in.c if_ether.c / in6.c nd6.c )

- Exports all the details to external callers (hash table, struct llentry, etc..)

- Method callback not finished - most of things goes inline (like deleting objects in lookup handler)

- ..or even allocating memory while holding AFDATA write lock

- Uses sockaddr instead of plain addresses (see below)

Proposed solution

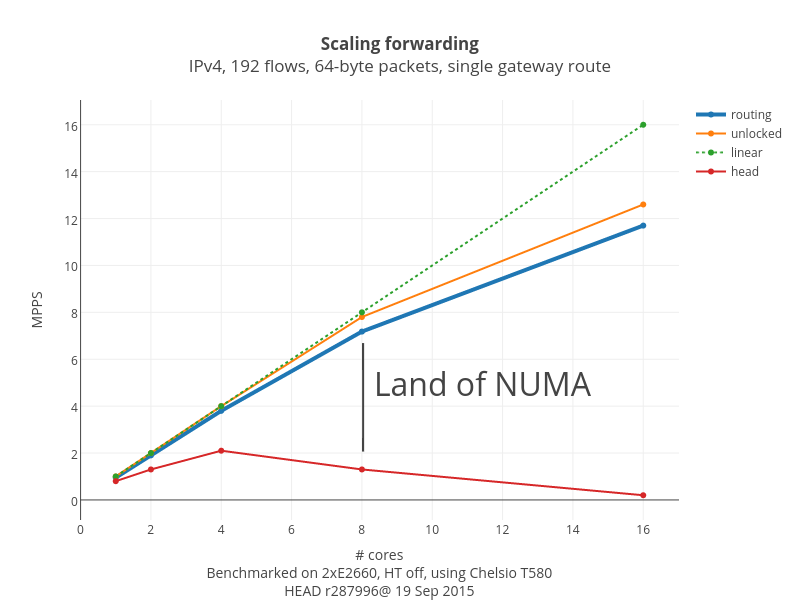

Most of the performance issues described in "Problems" sections were addressed in projects/routing branch (some already merged to HEAD). We managed to remove rwlocks/mutexes from hot path, so these changes permits us to scale close-to linear based on number of cores. In fact, we were able to reach close to 12MPPS for IPv4 forwarding (2xE2660, 8 cores) which is 5-10 times faster than current benchmarks.

Some benchmarks

Forwarding benchmark

- "routing" represents projects/routing branch results

- "unlocked" represents projects/routing branch with route/llable rmlock calls commented out

- "linear" represents linear appoximation of single-core projects/routing results

- "head" represetns HEAD@r287996 branch

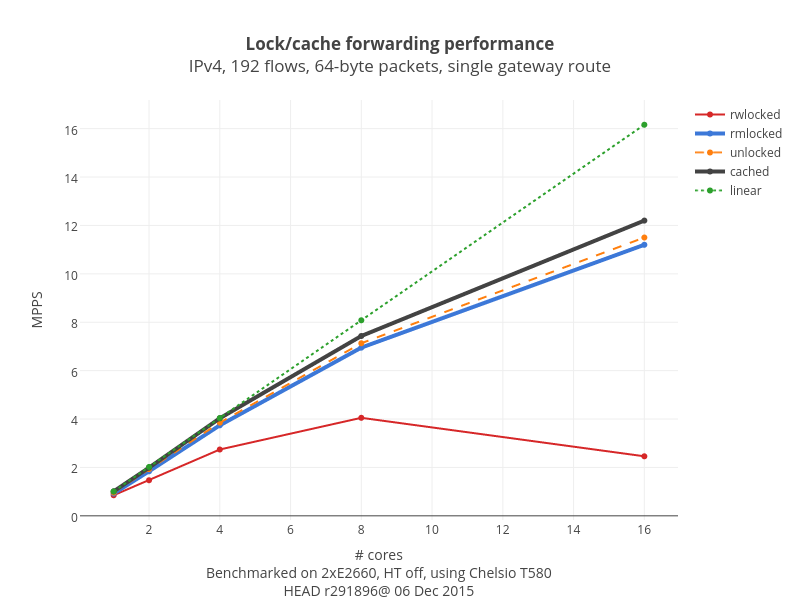

Forwarding/locking benhmark

- "rwlocked" represents HEAD w/o lle read lock/rtentry lock (lltable/radix are rwlocked)

- "rmlocked" represents HEAD w/o lle read lock/rtentry lock (lltable/radix are rmlocked)

- "rmlocked" represents HEAD w/o lle read lock/rtentry lock (lltable/radix are also not locked) (e.g. this is performance w/o locking impact

"cached" represents HEAD w/ lle & rtentry passed to ip_fastwrd (e.g. this is performance w/o locking AND w/o radix/lltable lookup impact)

- "linear" represents linear appoximation of single-core "cached" results

High-level overview

The key concept is hiding all routing internals and providing pre-computed easy-to-use result to all consumers with minimal locking. We provide separate functions for each address family (fib4/fib6/rib). We also provide several different (in terms of returned info and relative overhead) methods of retrieving routing data. Typically, lookup result is pre-computed next hop data, copied to caller-provided 64-bytes nhop_* structure, with (optionally) all necessary data _including_ the data that needs to be prepended to mbuf (currently - pre-computed L2 header).

Locking changes

Route table is switched to ipfw-like dual-locking mode: fast path is protected with rmlock, configuration changes requires to acquire cfg rwlock AND write rmlock.

- rte mutexes are mostly unused leading to single rmlock necessary for L3 lookup

- LLE if_afdata lock uses the same dual-locking approach. (there are plans to move lltable lock inside lltable)

- Additionally, number of steps are taken to avoid acquiring lle rwlock as much as we can

New api/structures are described separately in API page.

More details on routing

Routing customers can be split in several categories:

- check-rtable users (firewalls doing uRPF, various prefix checkers).

- Here we don't need to do ANY refcounting, we need to provide very basic info on most specific match using optimised fib table lookup.

- L4 level code (like TCP, trying to extract some useful info like MTU)

- Here we might need to provide things like MTU or some L4-specific data saved in given rtenry.

- Forwarding code (_output() routines)

- Here again we need very basic info, so we can use optimised fib table lookups.

- Control plane code (rtsock, address routines)

- Use slower rib-based procedures returning individual rtentries

More details on LLE

- LLTABLE was changed to provide more callbacks (like add/del/dump/hash/enumerate items) without enforcing particular storage (e.g. hash table) model.

- struct llentry is now basically split into 2 pieces:

- all fields within 64 bytes (amd64) are now protected by both ifdata lock AND lle lock e.g. you require both locks to be held exclusively for modification. All data necessary for fast path operations is kept here. other control-specific fields are protected by lle lock

- simple state machine was added to ARP: in most cases (unless record is going to expire in several seconds) we can simply use lle without side effects.

- However, prior to expire time we have to get some sort of feedback from data path indicating if entry is really used. This is done by (mutex-locked) setting .r_skip_verify field to 0, which is checked in timer code

- ND state machine started to be more complex: same trick with .r_skip_verify field was applied there

Regressions

No more ifa packet accounting (can be observed in netstat -s). It was not always accounted correctly (aliases, IPv6 SAS) and now needs to be killed

- No more per-rte packet accounting (seen in netstat -rn)

Merge plan

Basic plan looks like the following:

Merge most of LLE changes not related to locking/timers (e.g. all new lltable callbacks/enumerators/etc)

- Perform one-by-one conversion of non-critical route(4) consumers [PARTIALLY DONE]

- Discuss/merge new routing lookup API for data path

Discuss/merge lltable locking (afdata rwlock -> lltable rmlock+rwlock)

- Discuss/merge routing locking changes

Status

Current conversion status is available here.

Considerations/Open questions

- Wider use of rmlock in kernel. Rmlock has side effect of "pinning" current thread which might lead to more severe consequences when using (contested) mutexes/rwlocks.

- Here we try to avoid acquiring any additional locks as much as possible (no locks for routing lookups, rare lookups for LLE stuff). However, more stuff could be done (for example, explicitly avoid acquiring lock when sending LOTS of traffic to single dead host with no L2 lle info). Additionally, write lock costs a LOT more, so some sort of batching should be considered for inserting kernel routes/lle entries.

- Could we teach PFIL to start looking at mbuf starting from certain offset? (eliminate 2x LLE header copy)

- "slowpath" netisr queue: we'd better delay handling of some packets (like first packet being sent to host, triggering creation of LLE record) to separate netisr queue to batch such cases (since LLE link requires WLOCK)

- Should be insert link-local routes into RIB?

- Typical routing lookup now looks like the following: "What is egress interface/gw for link-local address X and embedded scope Y?" "You know, it is interface route and the egress interface is Y!"

Future plans

- Implement auto-growing array for per-fib-per-af nexthops

- Implement LLE tracking for nexthop calculations to be able to immediately provide all necessary info in single route lookup for gateway routes

- Implement multipath support for rtsock

- Implement "tracked nexthops" for tunnels (e.g. _proper_ nexthop caching) permitting single-lookup result for tunnel traffic

- Implement API for modular per-AF FIB lookup (think about sorted array of prefixes for single-interface-with-default boxes, or IPv6 hash-based routing, or special algos like DIR-24-8 or DXR for holding full-view)